Beyond Hadoop: Big Data - Simple, Real-time and Fast.

By admin Technology With No CommentsAny intelligent fool can make things bigger and more complex...

It takes a touch of genius - and a lot of courage to move in the opposite direction. -E.F. Schumacher

Introduction

Big Data is typically defined as high Volume, high Velocity, and high Variety information assets that require new forms of processing to enable enhanced decision making, insight discovery and process optimization.

But most organizations don't really have "Big Data" (as in 100TB+). Save for a few Facebooks, Twitters and Amazons of this world, few organizations have petabytes of actionable data to process. Most of them _do_ need to process (and store) non-trivial data volumes, but the challenges of Big Data applications are more often found in other facets of Big Data processing.

The three "V"s - Rearranged

In addition to Volume, organizations need to be able to handle the ever-changing, real-time data (Velocity), as well as ingest variety of sources and formats of data and normalize it into harmonized models suitable for analytics (Variety). The focus of effort for most businesses will probably be prioritized as follows:

- Variety - most companies share this challenge, data can be structured, unstructured, in a variety different formats

- Velocity - many companies need Operational Excellence and Real-time Analytics of data streams

- - Low Transactional Latency - rapid processing of both stream and at-rest data.

- - Low Data Latency - rapid availability of a large amount of data at-rest and in-motion.

- Volume - few companies routinely process more than 100TB of actionable data

Business and Technical Requirements for Big/Fast Data software stacks

Modern Big/Fast Data software stacks need to be able to support all three Vs listed, but there are other, business criteria affecting adoption:

- Implementation time

- Return on Investment (ROI) & Total Cost of Ownership (TCO)

- Flexibility + Fit for the task at hand.

At the technical level, modern Big/Fast Data stacks need to provide the following:

- Architectural simplicity and elegance

- Low Transactional- and Data- Latency

- High-throughput, with up- and out- scalability

- Real-time, on-demand analytics

- Data Stream/Complex Event Processing

- SQL-like querying, ACID, transaction support

- Distributed logic Execution Framework

- High Availability and Fault Tolerance

- Stateless, decentralized, elastic cluster management

Hadoop is most likely not the right choice

Hadoop is almost synonymous with Big Data, coming with a lot of hype promising technological nirvana. But Hadoop is not the only game in town and most likely not the right choice. Organizations working on Big Data initiatives are advised to look past the hype and evaluate Hadoop versus other Big Data software stacks available, which, while less visible, may be more suitable for their business needs and provide a much higher ROI.

Looking at the requirements list above, it is clear that Hadoop actually does NOT meet many of them. Some shortcomings (such as lack of support for stream processing) can be somewhat remedied by tacking on additional frameworks, such as Apache Storm (at a significant cost, see "Questioning The Lambda Architecture"). Many other shortcomings (lack of simplicity, no support for on-demand queries) - can not. But the major deal-breakers appear to be Hadoop's complexity and implementation costs.

Created in 2005, Hadoop was heavily influenced by Google's web-indexing business. At its core, Hadoop still remains a batch-oriented, low-level scheduler/executor for distributed MapReduce tasks, with distributed flat-file storage, typically requiring several minutes (and up) to complete a simple task. Acceptable for a high-volume, low-velocity class of tasks, but, completely inadequate for a majority of business use cases requiring both Data and Transactional Velocity.

Data scientists agree: 76% data scientists participating in a survey felt Hadoop is too slow, and takes too much effort to program.

Contenders

There are several competing camps vying to replace Hadoop, in particular MPP and NoSQL databases. But there are a few problems with databases positioned as sole, all-encompassing tools for Big/Fast Data processing:

- SQL-like languages are declarative (not Turing-complete)

- Not all data is fit to be stored in a database, some types of data are better stored in a filesystem, etc.

- Even if a procedural language (a la PL/SQL) were provided, placing business logic in the data layer is NOT a good idea

The right choice

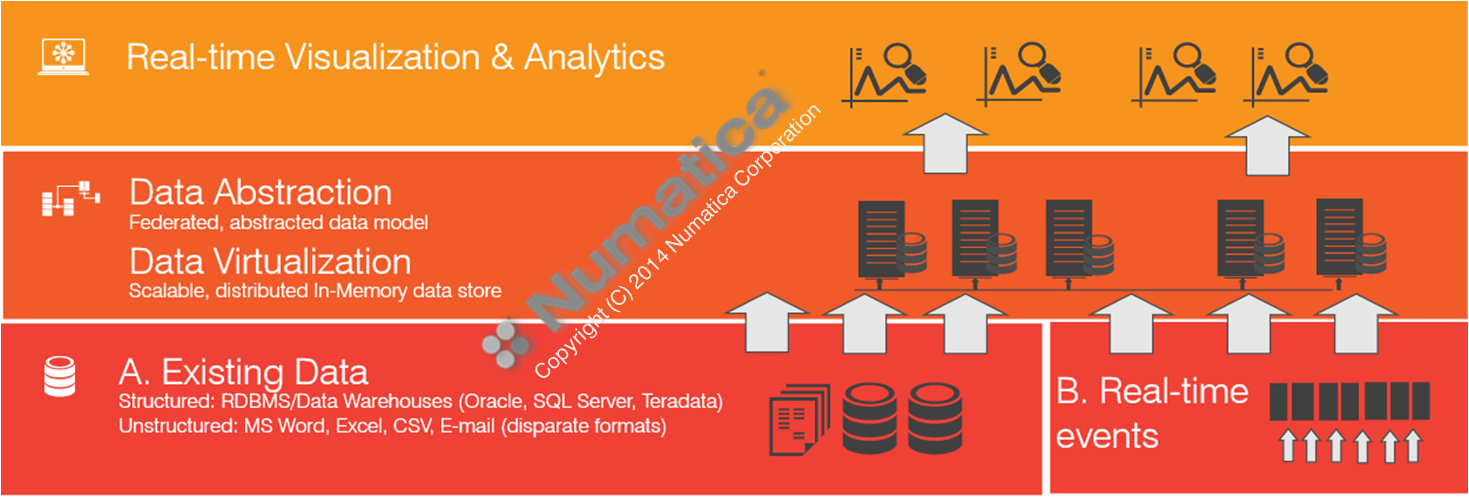

At Numatica, we are placing our bets on a next generation computing paradigm known as in-memory grid computing and we are building a software stack that is an order of magnitude less complex, faster and more flexible than Hadoop. The stack uses RAM as primary operational storage with pluggable backing/emergency storage using distributed file systems (HDFS, GlusterFS, etc.) and/or NoSQL and relational data stores. The stack implements an elastic, peer-peer architecture and incorporates a distributed code execution framework, which is simpler and more flexible than MapReduce. More details will be announced in the future, but a high-level conceptual processing model looks as follows:

Summary

In summary, Hadoop is only acceptable if you are a well-funded organization handling high volume (petabytes) of data and don't need on-demand analytics, or as a replacement for super-expensive and proprietary Data Warehouse products, such as Teradata. Businesses interested in implementing on-demand analytics or real-time data stream processing will benefit from alternative approaches.